PD43+ Validator

PD43+ explained

Public Document 43+, or PD43+ for short, is a searchable database of all elections (excluding municipal ones) held in Massachusetts since 1970. It was created by digitizing Public Document 43, the state's official election report (the original reports are available in the State Library's archives, although they aren't well organized there). PD43+ makes it far easier to search for particular elections, extract their data in a parseable form, and collect large amounts of election data quickly. That makes it an invaluable resource for anyone researching Massachusetts elections, and I've used it for that purpose several times myself.

PD43+ Errors

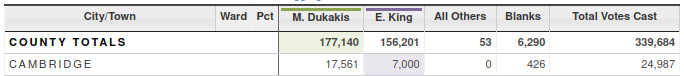

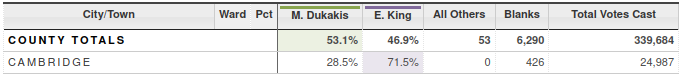

Unfortunately, the data in PD43+ is also plagued by thousands of inconsistencies. These seem to arise because the same information is stored in four distinct sources of truth that are never checked against each other. This is only an inference: I have no access to the source code or raw data and can only observe the website's behavior. For example, the 1982 Democratic gubernatorial primary, in which ex-governor Michael Dukakis challenged incumbent Edward King (who had himself primaried Dukakis four years earlier), contains an inconsistency in the Cambridge results that demonstrates the issue well (archive link, in case the site is fixed).

I first ran into this while creating a map of the electoral shifts between the 1978 and 1982 primaries. Cambridge stood out as a major outlier shifting towards King, despite a large statewide shift in the opposite direction. Checking the original source document revealed that the 1982 vote counts for King and Dukakis in Cambridge had been swapped during data entry. I reported this to PD43+ through the email address of the state's election office, and they fixed the vote counts. However, they didn't correct two other pieces of information: each candidate's percentage, and the winning candidate (which determines whose cell is highlighted).

Although Dukakis won Cambridge, King's cell still carries the highlight indicating victory

The percentages remain swapped; the correct values are 71.5% for Dukakis and 28.5% for King

Errors - technical details

PD43+ transmits its data through the HTML using classes. For example, this is the element of the data table representing Dukakis's tally in Cambridge:

And this is the element for the King tally, with some irrelevant style attributes removed:

Notice the classes number_17561 and percent_0-285004682 on Dukakis's cell, and number_7000, percent_0-714995317, and winner on King's. Since 17,561 / (17,561 + 7,000) ≈ 0.715, the vote counts are now correct, but the percent and winner classes still reflect the swapped data. The page's JavaScript uses these classes to render the highlight behind the winner's cell. It also uses them to switch between percentages and vote counts when the controls at the top of the table are clicked, although the initial vote counts and highlight are sent as part of the HTML. The problem is that neither the frontend nor the backend computes these classes from the actual vote counts or checks them against those counts. The only explanation I can see is that they're stored separately in the site's database.
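
A check for this kind of mismatch can be short. The sketch below checks one row of the table in Python. It assumes a class grammar inferred only from the two examples above: number_<votes>, percent_<integer part>-<fractional digits>, and a bare winner class. It also assumes each percentage is relative to the sum of the candidate cells in the row. The names decode_cell and check_row are hypothetical and are not taken from the Validator.

```python
import re

# Class grammar inferred from two examples only (an assumption):
# "number_17561" -> 17561 votes, "percent_0-285004682" -> 0.285004682.
NUMBER_RE = re.compile(r"^number_(\d+)$")
PERCENT_RE = re.compile(r"^percent_(\d+)-(\d+)$")


def decode_cell(classes):
    """Return (votes, fraction, is_winner) decoded from one cell's classes."""
    votes = fraction = None
    for cls in classes:
        number = NUMBER_RE.match(cls)
        percent = PERCENT_RE.match(cls)
        if number:
            votes = int(number.group(1))
        elif percent:
            # The hyphen stands in for the decimal point.
            fraction = float(f"{percent.group(1)}.{percent.group(2)}")
    return votes, fraction, "winner" in classes


def check_row(cells, tolerance=0.0005):
    """Check one locality's percent and winner classes against its vote counts.

    `cells` maps candidate name -> list of CSS classes. Percentages are
    assumed to be relative to the sum of the candidates' votes in the row.
    Returns a list of human-readable error strings (empty if consistent).
    """
    decoded = {name: decode_cell(classes) for name, classes in cells.items()}
    total = sum(votes or 0 for votes, _, _ in decoded.values())
    errors = []
    for name, (votes, fraction, _) in decoded.items():
        expected = (votes or 0) / total if total else 0.0
        if fraction is None or abs(fraction - expected) > tolerance:
            errors.append(f"{name}: percent class {fraction}, expected {expected:.4f}")
    # The winner class should sit on exactly one cell: the one with most votes.
    leader = max(decoded, key=lambda name: decoded[name][0] or 0)
    marked = [name for name, (_, _, is_winner) in decoded.items() if is_winner]
    if marked != [leader]:
        errors.append(f"winner class on {marked}, but {leader} has the most votes")
    return errors


if __name__ == "__main__":
    cambridge_1982 = {
        "Dukakis": ["number_17561", "percent_0-285004682"],
        "King": ["number_7000", "percent_0-714995317", "winner"],
    }
    for error in check_row(cambridge_1982):
        print(error)
```

With the Cambridge classes quoted above, this flags both percent classes and the winner class. A tie for the most votes would also be reported, because max picks a single candidate, so a real check would need an explicit rule for ties.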

Code outline

The Validator is implemented as a Python script. It starts at the main function, which uses argparse to parse the arguments that determine which elections to validate. It then queries PD43+'s own search page and parses the resulting HTML to extract the link to each election, adding the links to a queue. All HTTP requests use the Requests library, and all HTML is parsed with Beautiful Soup 4. For each election in the queue, the script downloads the election's page and parses it to extract the relevant data: vote counts, percentages, and winners according to the HTML classes, plus a bit of metadata. I extract the vote counts from the inner HTML and from the HTML classes separately, in case they draw on separate sources of truth, but they seem to use the same one.
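
The sketch below shows how the vote count can be read from both places and compared. The Validator itself uses Beautiful Soup 4, but to keep the example self-contained it swaps in Python's standard-library html.parser. It assumes each tally is a table cell (a td element) whose class list contains a number_ class and whose text is the formatted count, such as 17,561. The real PD43+ markup is an assumption here, and so are the names VoteCellParser, class_votes, and compare_sources.

```python
import re
from html.parser import HTMLParser

NUMBER_RE = re.compile(r"^number_(\d+)$")


class VoteCellParser(HTMLParser):
    """Collect (classes, inner text) for every td element with a number_ class.

    Assumes tallies are plain td cells; the real PD43+ markup may differ.
    """

    def __init__(self):
        super().__init__()
        self.cells = []        # list of (classes, text) pairs
        self._classes = None   # classes of the td currently being read
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "td":
            classes = (dict(attrs).get("class") or "").split()
            if any(NUMBER_RE.match(c) for c in classes):
                self._classes, self._text = classes, []

    def handle_data(self, data):
        if self._classes is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "td" and self._classes is not None:
            self.cells.append((self._classes, "".join(self._text).strip()))
            self._classes = None


def class_votes(classes):
    """Vote count encoded in a number_ class, or None if there isn't one."""
    for cls in classes:
        match = NUMBER_RE.match(cls)
        if match:
            return int(match.group(1))
    return None


def compare_sources(html):
    """Return (class_count, text_count) pairs for cells where they disagree."""
    parser = VoteCellParser()
    parser.feed(html)
    parser.close()
    mismatches = []
    for classes, text in parser.cells:
        try:
            text_count = int(text.replace(",", ""))
        except ValueError:
            text_count = None  # empty or non-numeric cell text
        if class_votes(classes) != text_count:
            mismatches.append((class_votes(classes), text_count))
    return mismatches
```

An empty result means the inner text and the number_ class agree for every cell, which matches what the Validator observed in practice.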

Once the data is extracted, the script analyzes it for a variety of inconsistencies; a full list of error types is available here. Besides checking each page on its own, I also check statewide elections for consistency between their statewide and county-level pages. For elections where it's available, I also check the precinct-level data in CSV files. This is the only place I look at precinct-level data; I don't use the API that the page itself uses, because that would require far too many requests. Once the queue is empty, the errors are collated (if collation is enabled) and exported to a file. Collation consolidates multiple errors of the same type in the same election into a single entry. This helps in cases such as every city having its total vote count erroneously set to 0.

I also wrote some deployment infrastructure to help run the validator: a Bash script that runs the validation on every office and produces output sorted by office, and Dockerfiles for greater portability.
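
Two of these steps are easy to sketch: the statewide-versus-county comparison and collation. The following Python is a minimal illustration under several assumptions. Tallies are taken to be already extracted into plain dictionaries, and county totals are expected to sum exactly to the statewide totals. The ValidationError record, its fields, and error-type strings such as "zero_total" are hypothetical and not the Validator's actual output format.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ValidationError:
    """One inconsistency found by a check (hypothetical record format)."""
    election_id: str
    error_type: str
    locality: str


def check_county_sums(statewide, county_pages):
    """Compare a statewide page's candidate totals with its county pages.

    statewide:    candidate -> votes on the statewide page
    county_pages: county -> {candidate -> votes} from each county page
    Returns {candidate: (statewide_votes, summed_county_votes)} for mismatches.
    """
    summed = defaultdict(int)
    for tallies in county_pages.values():
        for candidate, votes in tallies.items():
            summed[candidate] += votes
    return {
        candidate: (statewide.get(candidate, 0), summed.get(candidate, 0))
        for candidate in set(statewide) | set(summed)
        if statewide.get(candidate, 0) != summed.get(candidate, 0)
    }


def collate(errors):
    """Merge errors with the same election and type into one entry.

    Returns (election_id, error_type, [localities]) tuples in first-seen
    order, so a zero total in every city becomes a single line of output.
    """
    groups = {}
    for error in errors:
        key = (error.election_id, error.error_type)
        groups.setdefault(key, []).append(error.locality)
    return [(election, kind, places) for (election, kind), places in groups.items()]
```

Grouping on the (election, type) pair keeps the list of affected localities, so collated output loses no information. It is only shorter.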

Closing thoughts

The total number of errors is 11,209, or 3,500 with collation. These totals come from the line counts reported by `wc -l error-data/*` and `wc -l error-data/*collated.txt`, respectively, divided by 2, since each error is printed on one line followed by one blank separator line. This is relatively small compared to the tens of thousands of elections listed on PD43+, but large enough that fixing the errors manually is infeasible. For that reason, I'd recommend changing the code to use a single source of truth, which would fix every issue I've found and possibly some I haven't. Since I haven't seen the code myself, though, I don't know how difficult that change would actually be. It's important to note that my analysis only catches errors where PD43+ is inconsistent with itself. Cases where it's internally consistent but doesn't match the real result won't be caught by this method; the original swapped vote counts for Dukakis and King in Cambridge are one example. PD43+ is a great resource for researchers and casual browsers alike, so it's unfortunate that it's currently marred by these errors. My hope is that this analysis will help get them fixed and spur more interest in the topic.
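
The same arithmetic can be done without wc. The following Python sketch counts non-blank lines, which equals the line count divided by 2 under the one-error-plus-one-blank-line format described above. The error-data directory and the two glob patterns mirror the shell commands above. The function names count_error_lines and count_errors are hypothetical.

```python
from pathlib import Path


def count_error_lines(lines):
    """Count errors in one output file: one non-blank line per error."""
    return sum(1 for line in lines if line.strip())


def count_errors(directory="error-data", pattern="*"):
    """Total errors across every file in `directory` matching `pattern`."""
    base = Path(directory)
    if not base.is_dir():
        return 0  # nothing to count if the output directory doesn't exist
    total = 0
    for path in sorted(base.glob(pattern)):
        if path.is_file():
            with path.open(encoding="utf-8") as handle:
                total += count_error_lines(handle)
    return total


if __name__ == "__main__":
    # Same globs as the wc commands: all files, then only collated files.
    print("all files:", count_errors(pattern="*"))
    print("collated:", count_errors(pattern="*collated.txt"))
```

Counting non-blank lines instead of halving the total also tolerates a missing trailing blank line at the end of a file.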